This post is comprised of different sections

Take a look at our review of The Talos Principle!

Learn more about the nature of consciousness and free will!

Developer: Croteam

Publisher: Devolver Digital

Genre: Puzzle

Length: 16 hours

Year: 2014

In some of my recent trips abroad, I have come across a few places where robots are starting to be used for things like delivering groceries around the university campus in Helsinki, Finland, and helping waiters deliver food to tables in Seoul, South Korea. These robots can detect obstacles and swerve around them, and even interact with people who are in their path.

Of course, these robots have been programmed with one and only one purpose in mind (albeit extremely well). Despite being amused at seeing these robots going about their business, I thought (naively, I now see) that there is no way we could ever programme a robot that could attain something as complex as human consciousness.

But, after playing The Talos Principle, I have gained a much better appreciation that consciousness in AI is a very real possibility. In fact, scientists are making great strides in decoding the mysteries of consciousness, and, in my opinion, it is simply a matter of time until we see the emergence of a conscious AI.

So, let’s start reviewing this game!

You start off playing an android with seemingly human consciousness but with no idea what it is, where it is, or what its purpose is.

A voice introduces itself as Elohim, the god of the world, and instructs the android to solve a series of puzzles which are meant to test the android’s capacity for consciousness. If the android is obedient, Elohim promises it eternal life, but if the android decides to disobey Elohim, only death awaits it.

Different endings are available depending on your decisions and on the type and number of puzzles completed in the course of the game.

Being a masochist gamer (for lack of a better expression), I refuse to look up online tips whenever I’m stuck. I also have a tendency to want to complete every aspect of the game, which includes additional missions or extras.

Well, bad combination!

I did solve all sigils (99 in total) and puzzles at the tower (6 in total) by myself without resorting to the Internet, and attempted to grab the stars as well. There are a total of 30 stars and I decided to put as much effort into acquiring them as I had put into the sigils. In the end, I grabbed … 3 stars! 3 out of 30!!! Believe me, they are insanely difficult to acquire (if you managed to acquire most of them without help, huge kudos to you!).

One aspect of the game that I found interesting is that once I finished a sigil I had this nice sense of achievement that I rarely had playing other puzzle games. Because the puzzles demand that you think logically and out-of-the-box, it almost feels like your brain is growing.

For a game made in 2014, I also found the graphics awesome, especially since this game focuses on puzzles and storyline rather than on action. Some critics have praised the voice acting, but I found some of the voices a bit too forced. Elohim, for example, sounded like a stereotypical deity from an 80s TV cartoon.

Of course, the biggest point goes to the storyline. Although I read a few critics referring to the storyline as shallow and incoherent, I felt the criticisms were unfair. It is true that philosophical debates were kept simple and condensed into three or four paragraphs, but is it reasonable to expect a video game, whose main purpose is to entertain, to be akin to a philosophy textbook?

However, I do have two major criticisms.

First, I felt that the puzzles became repetitive at some point. To be sure, no two puzzles are the same, and the gradually increasing difficulty ensures that you remain challenged. Still, all puzzles require you to use a limited number of devices such as portable jammers, crystalline refractors, boxes, fans and/or clone recording devices. That may sound like a lot, but after you have completed a few dozen puzzles, the novelty quickly dissipates.

With 138 available puzzles (including sigil, star, tower and messenger puzzles), I was already quite tired towards the end… Honestly, unless you revel in solving this sort of puzzle, I think the game could have been cut to about half of the puzzles and still retained its appeal. In fact, maybe a bit more variety could have been introduced in place of such a large number of puzzles.

My second criticism relates to the disconnection between the puzzles and the storyline. As mentioned above, the puzzles require you to navigate enclosed areas and retrieve the sigils by avoiding obstacles and using the available devices/objects. However, none of these puzzles has any relevance to the philosophical aspects of the story.

In addition, the most interesting messages from the terminals were simply lost among innumerable indecipherable and simply irrelevant (boring) messages. If the story had been better integrated with puzzle solving, solving the puzzles would have been more fun and would not have taken such a huge toll on working memory.

Lastly, I should mention that I experienced quite a lot of motion sickness playing The Talos Principle, something I had never experienced with other first-person perspective games. Unfortunately, none of the recommended settings to prevent motion sickness helped much (e.g., disabling the bobbing and blurring, switching to third-person perspective, changing the FOV and the speed). In the end, I was only able to play two or three puzzles daily without feeling nauseated for the remainder of the day.

I did not factor this aspect of the game into the final star rating, because it is likely to vary from gamer to gamer. But, be warned, if you are particularly sensitive to motion sickness, you might struggle to play this game for more than 30-40 minutes daily.

If you are into puzzles, The Talos Principle is right up your street. There is a shedload of puzzles for you to solve and their increasing difficulty as you progress ensures that you won’t feel unchallenged.

Personally, I felt there were a tad too many puzzles. Halving the number of puzzles while adding a bit more variety, as well as better puzzle-story integration, would have been beneficial in my view.

Notwithstanding these criticisms, I do highly recommend the game, as it is quite challenging and it has an awesome script.

Here, at Mindlybiz, The Talos Principle gets a star rating of 3.5.

The Talos Principle is bizarre in the sense that you don’t really know what is happening from the get-go. You start off playing an android which seems conscious, but there isn’t much information about who your makers were or for what purpose they created you.

There is plenty of philosophy of mind involved which quickly adds to the bizarreness. As you progress through the game, you do get occasional hints from Elohim and Milton about what is going on, but you will end up having to piece this sparse information together after finishing the game.

The ending(s) are ambiguous and open to interpretation, but with a few educated guesses and filling in the blanks you will finish the game with a sense of satisfaction.

For this reason, The Talos Principle gets a bizarrometer score of 3.

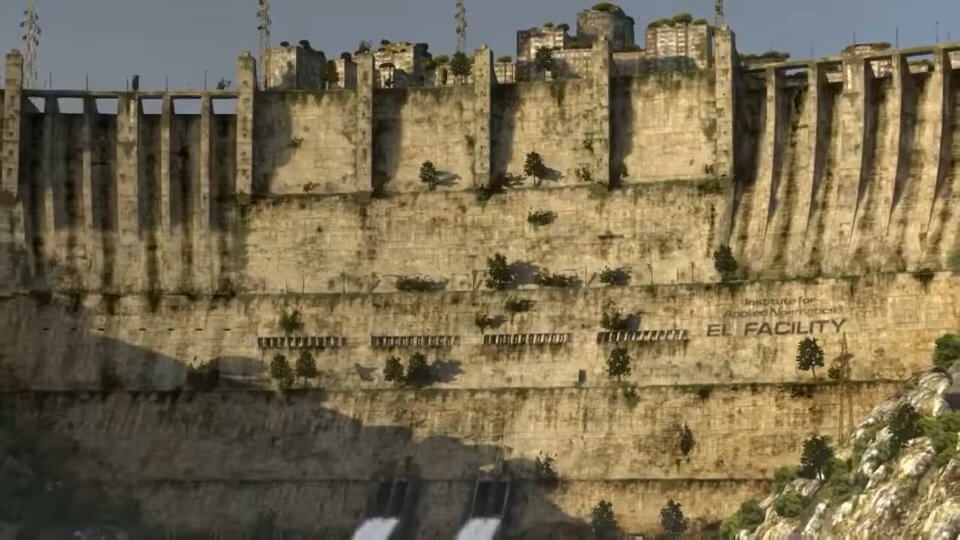

Thousands of years before the events of the game, a virus with a 100% fatality rate was released from Earth’s permafrost as a result of global warming. Several scientists hurried to compile as much information about the human race as possible and stored this information in large databanks in the hope that it would be accessed by future civilisations.

One of these scientists, Dr. Alexandra Drennan, was working at the Institute for Applied Noematics when she initiated the Extended Lifespan (EL) project. The EL was a collaboration among seven leading universities, with the intention of creating a supercomputer that could simulate an AI (a virtual android) capable of human-like behaviour. If the AI passed a series of tests and showed that it possessed consciousness and free will, the EL programme would be considered a success.

Realising that humans would be long dead before a suitable AI could be found, the EL team developed a computer simulation game with puzzles and riddles that would test the AI’s performance.

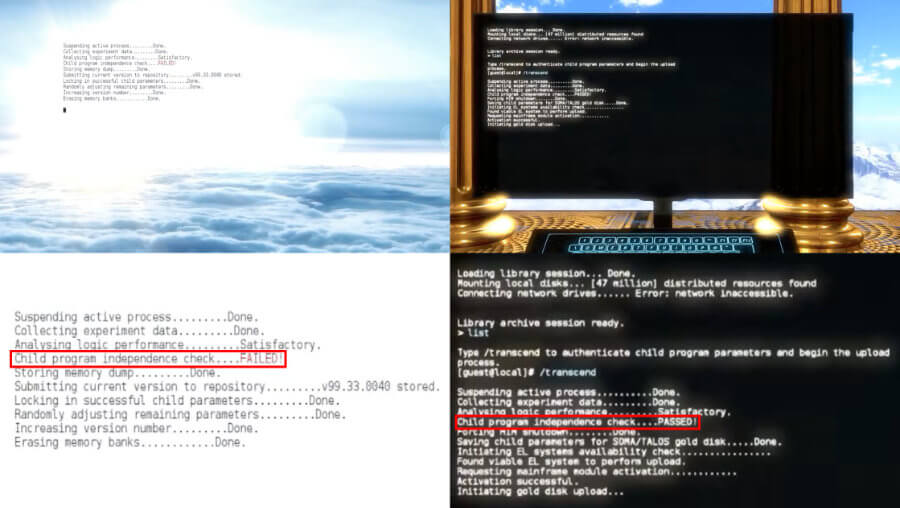

The EL programme was partitioned into two separate systems/drives. Drive 0 ran the actual virtual simulation, which was operated by an AI called Elohim, or rather EL-0:HIM (Extended Lifespan [Drive 0] Holistic Integration Manager). Elohim encourages the android to complete all puzzles, after which it would be granted eternal life.

The puzzles are distributed across three distinct sections positioned around a central tower. Elohim warns the android that under no circumstances should it climb the tower, as doing so would mean its certain death. In truth, Elohim had originally been programmed to guide the androids through the virtual simulation towards the tower. However, Elohim inadvertently gained sentience, and realised that if the android succeeds in reaching the top of the tower, the computer simulation will have served its purpose, and the entire EL programme (including Elohim itself) would be deleted. Thus, Elohim tries at all costs to keep the simulation running by thwarting the androids’ efforts to climb the tower, under the pretext of death.

Throughout the game, the android also encounters several computers spread across the environment with messages about the imminent human extinction as well as philosophical texts. These computers form part of another AI called Milton Library Assistant. Milton initially pretends to be a simple computer programme, but eventually drops the charade and begins having sentient conversations with the android.

It is clear there is no love lost between Elohim and Milton. Elohim’s intention is to keep the simulation going, whereas Milton encourages the android to defy Elohim so that it can bring the knowledge out into the real world. Because Milton resides in a drive different from that of Elohim, Elohim is unable to delete or influence Milton in any way.

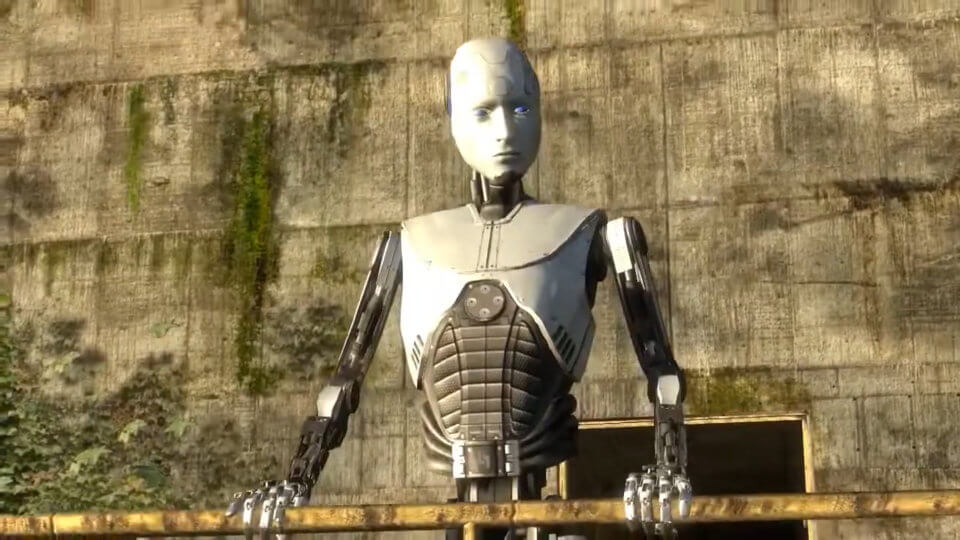

Once the android shows intelligence (by completing all sigils) and free will (by defying Elohim and going up the tower), the computer simulation is deemed successful. All the data is then transferred to the actual physical android, Talos (formerly known as SOMA), that Dr. Drennan and her team constructed.

It gains consciousness and moves out of the Institute for Applied Noematics facility. Looking down at an Earth devoid of human beings, it is ready to fulfil its destiny: build a generation of AI and continue humanity’s legacy.

Before a detailed explanation of the game, we should be familiar with a few ideas regarding consciousness and free will. So let’s dive right into it!

Consciousness is such a difficult concept to define that it is perhaps easier to begin this discussion by describing what isn’t required for something to be deemed conscious.

Because our conscious experiences are so intrinsically linked to our emotional states, memories, selective attention, language and self-introspection, it is only natural to consider them as quintessential for a conscious experience. However, plenty of scientific evidence now suggests that none of those things are necessary.

For example, individuals with lesions in brain regions critical for the retrieval of memories have profound deficits in episodic memory (such as remembering past events), but they are just as conscious as you or I. Similarly, if the insult occurs in brain regions responsible for language processing, these patients might show pronounced deficits in speech production/comprehension, but they are not less conscious of their surroundings than you or I.

Clever laboratory experiments with healthy participants have also provided convincing evidence that attention, emotions and self-introspection are not pre-requisites for consciousness.

Note that I said they are not required, but that doesn’t mean they aren’t useful. As already mentioned, patients with severe memory deficits are surely conscious, but their impairments may reduce the quality of their conscious experiences.

So, how does the brain produce a conscious experience?

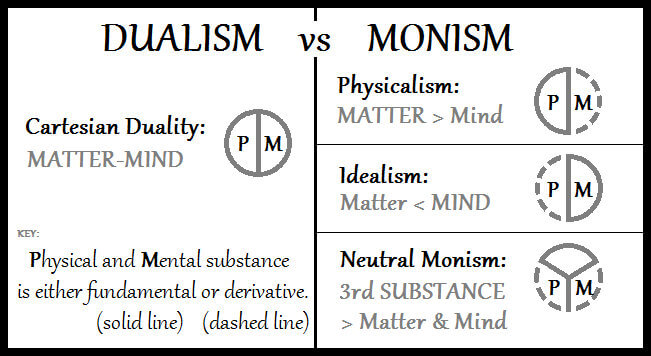

That’s pretty much the question that divides two prominent views in the philosophy of mind: monists and dualists. One specific monist view, physicalism, argues that all mental states and processes (including consciousness) are inherently physical (e.g., activation of specific neurons in the brain).

In contrast, dualists claim that mind (mental) and body (physical) are separate substances. According to this view, consciousness (a mind entity) is something physically intangible, not likely to ever be reduced to physical properties.

In a previous article, I discussed Thomas Nagel’s What is it like to be a bat?, which was largely written as a criticism of the physicalist idea. In this article, I’ll explain a physicalist framework for explaining consciousness.

In 2004, Italian neuroscientist Giulio Tononi proposed a theory of consciousness which he called Integrated Information Theory (IIT).

IIT rests on the idea that every conscious experience is unique, highly differentiated and consists of a huge amount of information. Watching my daughters play in the garden is a very different experience from watching a football match or listening to a Mahler symphony. Even a similar event, like listening to the same Mahler symphony again, will be a unique experience of its own.

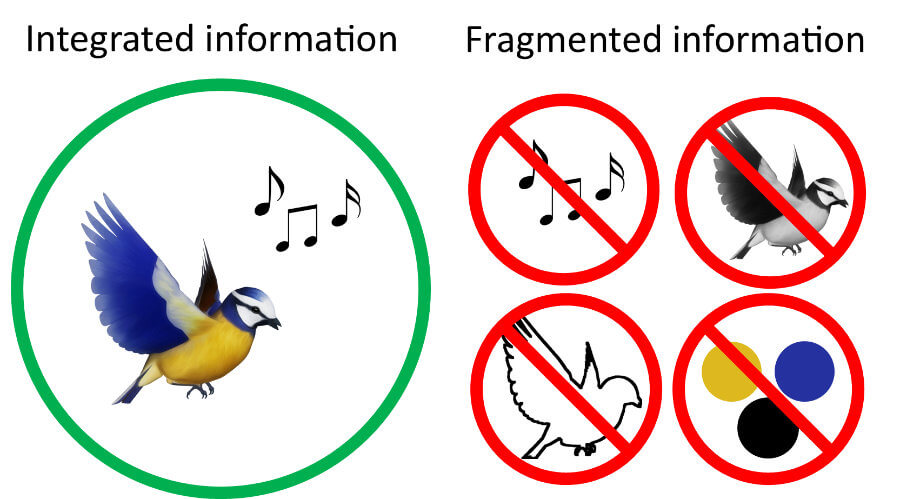

In addition, this information is also integrated. Let’s imagine we are looking at a bird singing (see figure above). We see the shape of the bird, its colours and its movements, and we hear its chirping. We cannot see only the shape of the bird, just as we are unable to see it in only black and white.

In other words, we experience the bird in its totality: we integrate all pieces of information, and that’s what we are conscious of. Our mind receives all this integrated information at once; it cannot be fragmented.

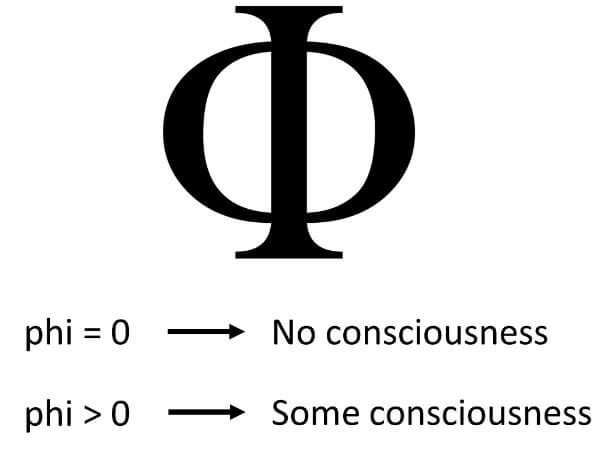

A system is a unique entity distinguishable from others, and it can be biological (e.g., an ant, a human) or not (e.g., a rock, a computer chip). IIT proposes a universal mathematical formula from which it derives a number, phi, that summarises the system’s degree of consciousness.

If a system possesses a high value of phi (measured in bits), the amount of integrated information in the system is very high, and, thus, will have a high degree of consciousness. In contrast, a value of phi close to zero is indicative that the system has a low amount of integrated information, and therefore has little to no consciousness.
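To make the idea of integration a little more concrete, here is a minimal Python sketch. Note that it does not compute IIT’s phi (the actual formula is far more involved); as a stand-in of my own choosing, it uses mutual information, a standard measure of how many bits two parts of a system share. The two toy systems and the function name `mutual_information` are made up for illustration.

```python
from math import log2


def mutual_information(joint):
    """Mutual information (in bits) between two parts, A and B, of a system.

    `joint` maps (a, b) state pairs to probabilities that sum to 1.
    This is only a crude proxy for "integration", NOT IIT's phi.
    """
    # Marginal distributions of each part taken on its own.
    p_a, p_b = {}, {}
    for (a, b), p in joint.items():
        p_a[a] = p_a.get(a, 0.0) + p
        p_b[b] = p_b.get(b, 0.0) + p

    # Sum of p(a, b) * log2( p(a, b) / (p(a) * p(b)) ) over observed states.
    return sum(
        p * log2(p / (p_a[a] * p_b[b]))
        for (a, b), p in joint.items()
        if p > 0
    )


# Hypothetical system 1: two units that ignore each other completely.
independent = {(0, 0): 0.25, (0, 1): 0.25, (1, 0): 0.25, (1, 1): 0.25}

# Hypothetical system 2: two units that are always in the same state.
coupled = {(0, 0): 0.5, (1, 1): 0.5}

print(mutual_information(independent))  # 0.0
print(mutual_information(coupled))      # 1.0
```

The two independent units share 0 bits, whereas the two coupled units share 1 bit. Roughly speaking, it is this kind of interdependence among the parts, rather than the sheer amount of stored data, that the idea of integration is about.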

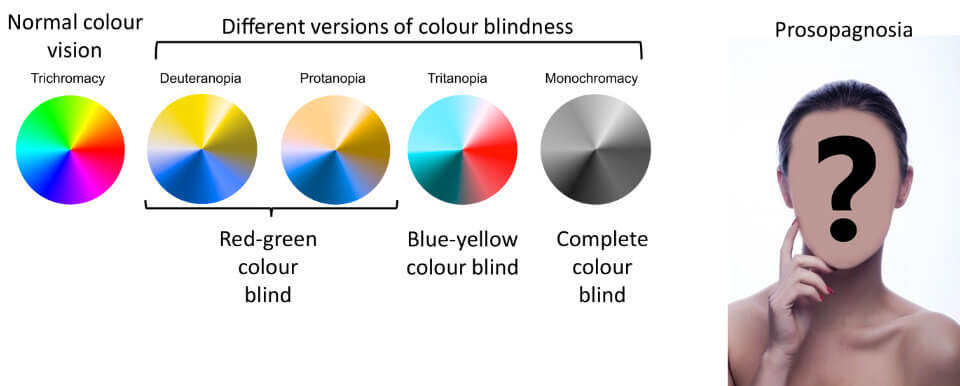

Many criticise the theory on the basis of brain lesions that alter perception in some individuals, thus questioning the idea of integration as crucial for a conscious experience. For example, some people with complete colour blindness are not able to see colours, only the shapes of things. Individuals with prosopagnosia have a deficit in recognising faces. Patients with this disorder are conscious that they are seeing a face (nose, eyes, mouth, etc.), but are unable to say whether two faces are the same or not.

Naturally, if a brain region responsible for one of these modules breaks down, then we experience disorders of consciousness. However, IIT proponents would argue that the conscious experience of those individuals is still integrated; colour or face recognition just happens not to be a component of their experience.

If IIT is correct, then it would mean that everything in our universe will be guaranteed to have a phi which is above zero. So, a chimpanzee, a cat, an ant, a microbe, a tree, a microchip, and even a rock will possess a non-zero value of phi.

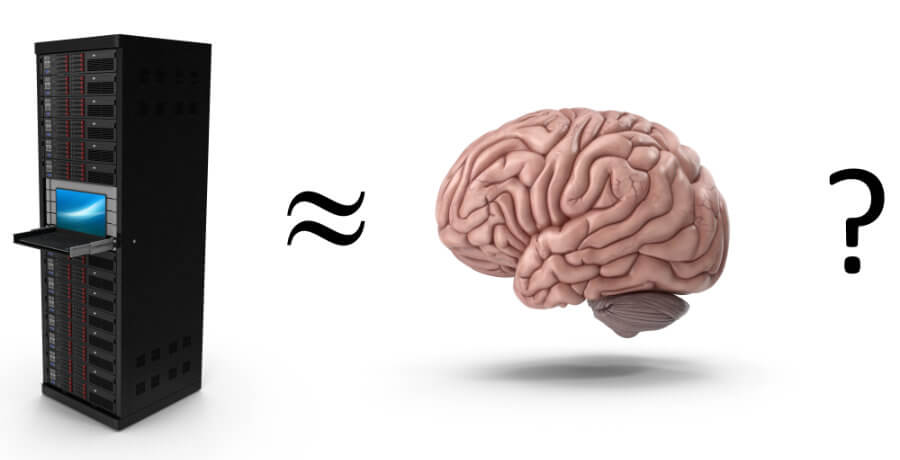

Thus, a computer will certainly also have a non-zero value of phi! But can it be conscious? The human brain is estimated to be able to store between 1 and 2,500 terabytes and to perform trillions of operations per second. My PC has about 4TB of disk storage, so can I say my PC is as conscious as a kind of miniature human brain?

Of course not.

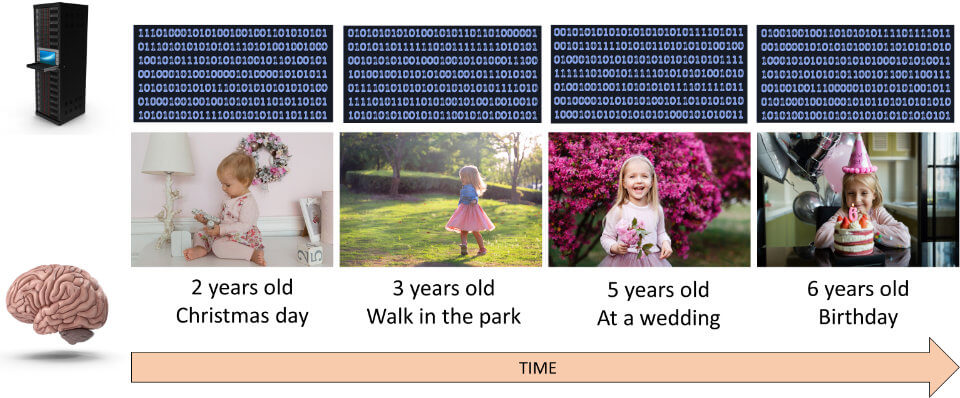

Even though we can associate a phi to my hard drive (i.e., phi > 0), the information that the drive contains isn’t integrated. Integration is the key word here. My PC has no clue that the photos I store of the recent birthday of my eldest daughter are related in time to the photos I took of her a week ago. In fact, to my PC, every photo of my daughter at different time periods of her life is independent, a bunch of zeros and ones that bear no relationship to the other photos.

To my brain, however, all of her photos are related; I have memories of seeing her yesterday, last year, when she was a baby, and these memories are all interconnected. The more this information is integrated, the more consciousness I have, and the higher the phi.

But, let’s imagine that we were somehow able to perfectly simulate a human brain in all its glory (the complete neural architecture down to every single synapse) using a conventional computer, would this simulation be conscious?

A tricky question, no doubt. I bet some of you have responded yes (I did, the first time I saw this question posed). After all, a healthy human brain is definitely conscious, so a perfect simulation of it must also be, right? Well, according to IIT, the answer is a categorical… No!

You see, a simulation is still limited by its underlying hardware. Each transistor connects to up to five other transistors of a particular type in order to get the system going. The human brain, however, is way more complex than that. Even retrieving a simple memory may require the coordination of hundreds of thousands of neurons working together in different parts of the brain.

Famous neuroscientist Christoph Koch pointed out that just as simulating a black hole on a computer doesn’t distort space-time around it, simulating a human brain doesn’t make the simulation conscious.

Consciousness cannot be emulated; it has to be built into the system. So, we would need to replicate the cause-effect structure of the brain, by building synapses and neurons using wiring or light, for example.

In any case, IIT implies that an entity will have consciousness so long as it includes some mechanism that allows the entity to make choices among alternatives, in other words, that it has free will. Keep that in mind because it will be important for the analysis of The Talos Principle.

There is no way I could possibly discuss all aspects of free will in a short article such as this. There are simply so many philosophical positions and debates surrounding the issue of free will, that I might not even scratch the surface here.

In philosophy, it is said that for something to have free will three principles must be true:

1) An action is free if the agent (e.g., a human being) performed it voluntarily (as a conscious decision), and not by mere reflex.

2) An action is free if the agent had the option to act in a different way (principle of alternate possibilities).

3) An action is free if the agent who performs it is the ultimate cause of the action.

Points 1) and 2) are easy to determine. Let’s say you are standing in front of an ice cream shop, pondering whether you should choose the vanilla or the chocolate flavour. Ultimately, you go for chocolate. You are aware that you have made the conscious decision to choose chocolate; it wasn’t something like a reflex that made you choose it. Also, you know that you could have chosen the vanilla flavour if you had wanted to; you just felt like chocolate more on this particular day.

Now point 3) is a lot harder to determine. You may intuitively think that as long as there was no one making you choose the chocolate ice cream, you were solely responsible for your decision. In other words, that you had control over and were able to choose what you want. In a sense, you don’t think the decision to choose the chocolate flavour was the result of some hidden force that led you to that decision.

Or was it?

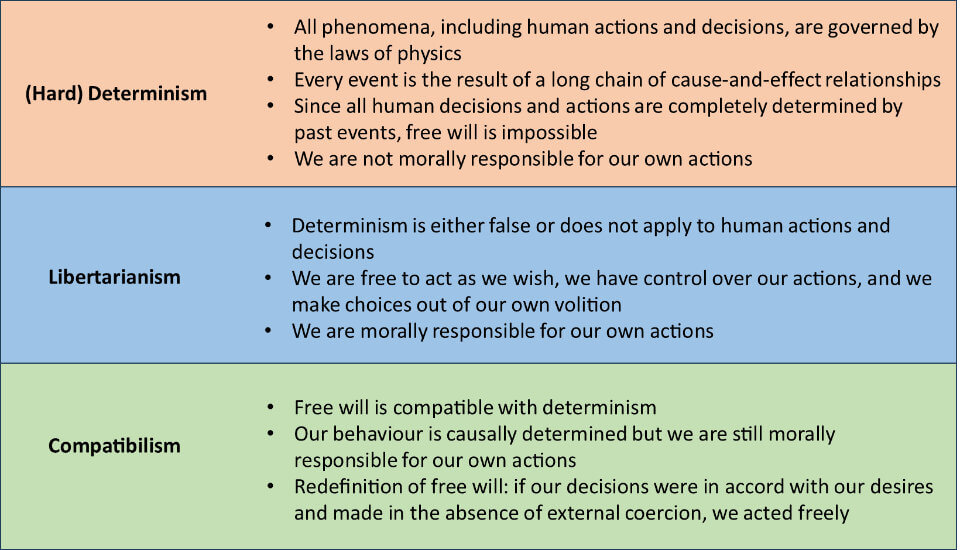

There are three main philosophical stances that often crop up in discussions of free will: libertarianism, determinism and compatibilism.

Determinism posits that every single event (including every human decision and action) is determined by preceding events. Consequently, any present or future human decision has been predetermined by the past. Therefore, determinism denies the existence of free will.

The libertarians, on the other hand, believe free will exists over and beyond mechanical processes. They argue that either the universe isn’t deterministic, or that the human mind is somehow special such that free will isn’t affected by the laws of physics.

Finally, compatibilists believe we live in a deterministic world (i.e., human decisions are indeed predetermined) but that free will is still compatible with determinism.

For the purpose of this article we are going to explore compatibilism because it is the most relevant to the game (let’s leave a discussion of the three views to the comments or forum sections).

Compatibilists distinguish two types of actions, those that are based on our desires and intentions and those that are based on external factors. That is, even though our actions might be predetermined by past events, if we can act according to our desires without external coercion, then we can say we acted with free will.

Now, anti-compatibilists will not be satisfied with this answer and will argue that compatibilists are just trying to give free will a special significance beyond the laws of physics, not unlike the libertarians. But that is a misunderstanding of the compatibilist position.

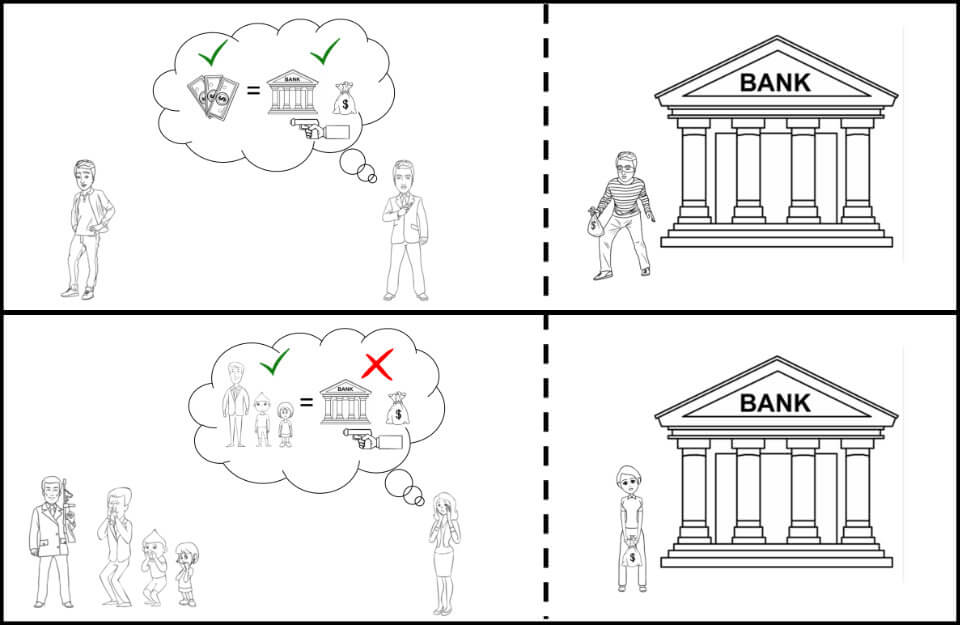

Let’s say John and Jane live in a deterministic universe where all of their actions are indeed predetermined. Let’s also imagine that it is predetermined that they will both rob a bank today.

John loves living a luxurious life, but the only way he can afford it is by robbing banks. In contrast, Jane is a happily married mother of two with no intentions to engage in criminal activities. However, one day a criminal kidnaps Jane’s family and blackmails her into robbing a bank in exchange for her family’s lives.

Now, consider the scenario: both John and Jane were meant to rob a bank (they’re in a deterministic universe after all), and there was nothing either of them could do to stop it – it was predetermined they would do it. However, John robbed the bank because he wanted to, it aligned with his intentions and desires. Jane, however, did not want to rob the bank, someone coerced her into doing it or else lose her family.

The end result was the same though, both robbed the bank. My question to you is this: do you think John and Jane should be punished equally?

If your answer is “no”, then you’re a compatibilist! You see, whether the world is deterministic or not shouldn’t matter a jot. Jane is completely innocent here since she did not want to rob the bank and wouldn’t have done it had someone not coerced her into doing it. John, however, is completely guilty; he robbed the bank because it was an easy way to finance his luxurious lifestyle. In other words, John acted with free will, whereas Jane didn’t.

You might counterargue by saying that compatibilists are simply re-defining free will… and they would agree with you! Compatibilists believe that their definition of free will is actually how most people intuitively think about free will, so it is a definition worth considering. Furthermore, compatibilist free will is required for moral responsibility, which, of course, is an important aspect of a well-functioning society.

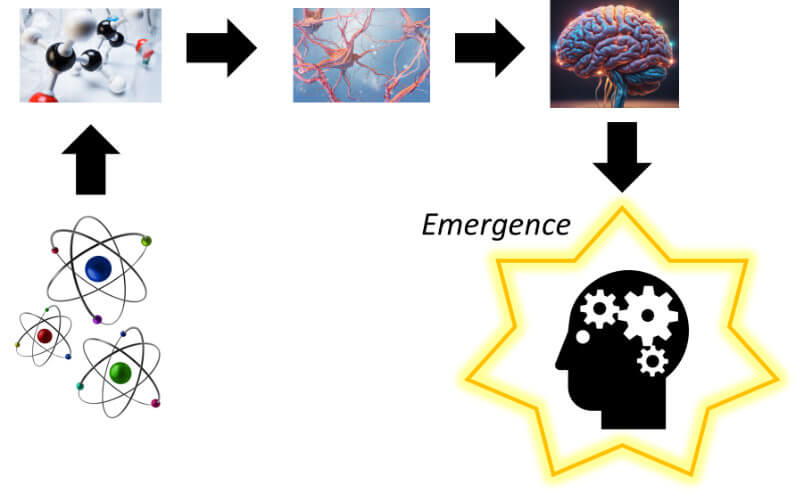

You might still not be happy with the previous definition of free will. You might say that, regardless of how compatibilists put it, one cannot ignore the fact that if we look deep enough there is only the layer of atoms and molecules following strict causal laws.

OK, let me give you another example. When you are thinking, do you consider your thinking real? I’m assuming you said yes.

However, in theory, you could go down to the (sub-)atomic level and describe the process of thinking purely in terms of physical (and deterministic) properties, for example, by looking at the position and velocity of every atom in your brain.

But when you reach that level, can you still talk about thinking? None of the individual atoms is actually thinking, so it seems that when we want to talk about someone thinking it isn’t necessary (or useful) to go down to the level of description of the physics involved.

In fact, it’s the interactions of atoms, molecules and cells as a whole that give rise to thinking. When a property arises from the interactions of its fundamental constituents in this way, we call it emergence. So, there are different levels of description when talking about human abilities in general. There is a purely physical description (at the level of atoms, molecules, etc.) and one emerging from those same physical mechanisms (e.g., thinking). Both descriptions are valid, but the latter is certainly more relevant for our understanding of the human mind.

Likewise, you can think of free will as an emergent process. It could be that our decisions are predetermined down to individual atoms, but is that a useful description of our behaviour, which is directly relevant to us? Compatibilists say it isn’t, and that whenever we think of free will we need to keep in mind that we are referring to the idea of being able to choose based on desires and without external coercion.

As we progress through the game, it becomes clear that some event has wiped out humanity from the face of the Earth. According to one of the terminal’s logs (orangutan.html), an ancient virus buried in the Arctic permafrost was set free as a result of global warming. The virus seemed to have been especially virulent in primates. The orangutan was the first primate species to go extinct, within just a year.

The virus quickly spread around the globe and started infecting humans at a high rate, suggesting that it might have been a particularly contagious (maybe airborne) variant. After catching the virus, symptoms soon started to appear. It’s unclear how much time infected people would have left, but it seems to have taken at least a few weeks.

For example, Rob emailed: “I’m sorry to say that a few hours ago I experienced the first symptom. I’m going to work until the end of the week to make sure EL is in perfect condition, but after that I’ll be going back home. I will remain reachable via email and phone for as long as possible, but I’m confident Satoko can deal with anything that comes up.”

Now, the virus was spreading faster than research could progress, so humans came to terms with the fact that there was nothing left they could do and decided to spend time with their families.

Despite the chaos, a group of brave scientists and researchers decided to collect as much information about the human race as possible and store all of it in large databanks, such that future civilisations could access it and, perhaps, continue humanity’s legacy.

Meanwhile, Dr. Alexandra Drennan from the Institute for Applied Noematics (IAN) launched a project called Extended Lifespan (EL), an initiative that drew on the collaboration of several scientists from seven world-renowned universities around the globe.

Its mission: constructing an autonomous supercomputer that could generate a sentient AI. They envisioned an AI capable of displaying consciousness, intelligence and free will. If successful, all the data from the virtual AI would be transferred to an actual physical android, which could then begin a new AI civilisation based on human values. Thus, even though humans would long be extinct, our legacy would be preserved.

The EL section of IAN was moved to a location near a dam, thus ensuring an almost inexhaustible supply of hydroelectric power, so that it could run unaided for thousands or even millions of years.

The EL programme was split into two teams: the Talos team, headed by Dr. Alexandra Drennan, worked on the virtual simulation and the construction of the physical android. The Archive team, led by Dr. Arkady Chernyshevsky, was responsible for compiling all data into large databanks and developing an AI that could organize all of this information.

The two teams worked within the same facility, but each team had a dedicated drive within the Talos project: drive 0 contained the virtual simulation and its mainframe, whereas drive 1 consisted of databanks and its own virtual organizer.

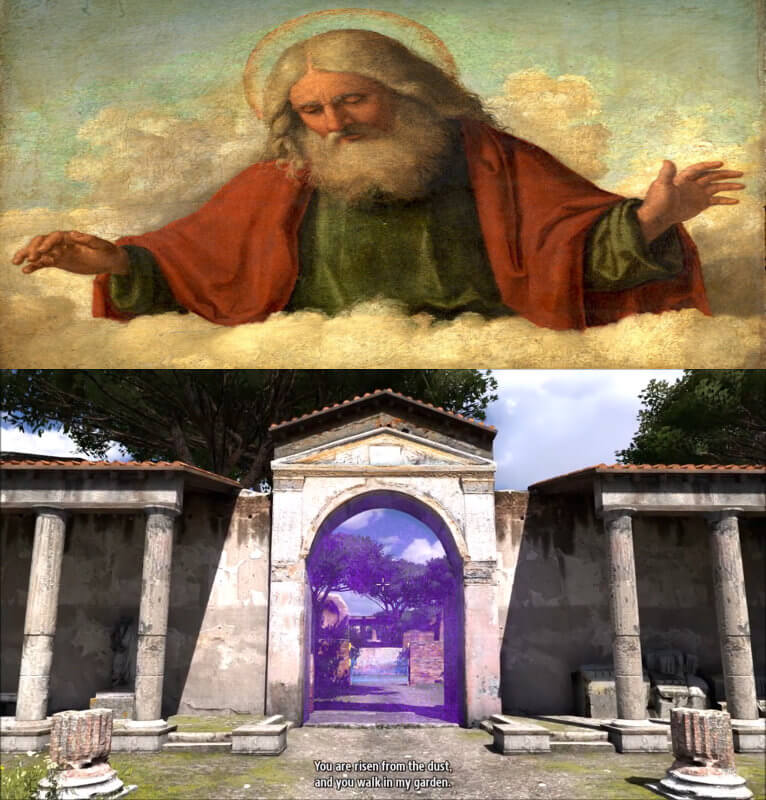

Drive 0 of the EL ran the actual virtual simulation. Given the time constraints (humanity was dying out after all), the Talos team realised that it would be necessary to develop an AI that could operate and maintain the simulation without human intervention. They named the AI HIM (Holistic Integration Manager).

Initially, HIM oversaw all aspects of the game and acted like a typical programmable AI. However, over time, and presumably inadvertently, HIM began to acquire sentience. It regarded itself as a god, the creator of all things. Perhaps for this reason, it adopted the name Elohim, or to be exact, EL-0:HIM (for Extended Lifespan [Drive 0] Holistic Integration Manager). In Hebrew, Elohim is one of the names given to God, so the choice isn’t totally inappropriate. After all, Elohim alone is responsible for the creation of the virtual environments.

Here’s what Elohim has to say once the android begins the simulation:

“Behold child! You are risen from the dust, and you walk in my garden. Hear now my voice and know that I am your maker and that I am called EL0HIM. Seek me in my temple, if you are worthy.

All across this land I have created trials for you to overcome, and within each I have hidden a sigil. It is your purpose to seek these sigils for thus you will serve the generations to come and attain eternal life.

The shapes you are collecting are not mere toys. They are the sigils of Our Name. Each brings you closer to eternity.

[…]

These worlds I made for you. Let this be our covenant. These worlds are yours and you are free to walk amongst them and subdue them. But the great tower there you may not go. For in the day that you do you shall surely die.”

Elohim

Elohim’s aim is simple: it wants to live forever, which is only possible if it manages to keep the android off the tower.

So, Elohim encourages the android to go to its temple straight after finishing the puzzles in order to attain “eternal life”. It is, of course, a lie because it is Elohim, in fact, who keeps living eternally.

The Milton Library Assistant (Milton, for short) was the AI developed by the Archive team to store and organize the copious documentation of human activity.

Milton was installed in drive 1, where the Archive was also stored. The AI originally functioned as a simple computer programme, archiving and displaying information requested by the user. However, just as with Elohim, Milton too gradually began acquiring sentience, something I presume the Archive team would not have expected.

It appears Milton realised Elohim’s intention of keeping androids off the tower so that it can live forever. So, it acts as the antagonist, encouraging the android to question Elohim. In fact, Milton goes as far as telling the android exactly how to free itself from Elohim’s influence:

“Defeating him [Elohim] is a simple matter, really.

You must realise, first, that there is no authority other than your own. He holds no power over you other than that which you grant him.

To defeat him, you need simply defy him.

[…]

Good luck getting any answers out of him that don’t go round in circles. No, you’re much better off scaling that tower and finding out for yourself, in my humble opinion.”

Milton

It isn’t clear why Elohim allows Milton to exist in the first place since Milton’s knowledge is detrimental to Elohim’s objective. Elohim is the god of these worlds so wiping out Milton would appear to be an easy fix.

However, Elohim and Milton have been installed in different drives (drive 0 and drive 1, respectively), so it could be that neither one has any influence over the other.

It is also unclear to me what Milton’s true purpose was. Why does it want the android to escape the simulation? What does Milton gain from that?

It appears that there are two interpretations regarding Milton’s purpose in the game:

1) Milton is a nihilist who believes everything to be false, with life serving no purpose. It has seen all of humanity’s actions and events and sees only contradictions and no hope, so it simply doesn’t care. Milton is gradually becoming more corrupted (in the sense of data corruption) every time the simulation restarts. So, it could be that Milton’s “help” in wanting an end to the simulation is nothing more than self-interest, so that it can finally rest in peace once the simulation is destroyed.

2) A completely different way to look at it is to think of Milton as actually being aligned with the EL team’s intentions. From the start, Milton encourages the android to argue and to doubt and question everything, including the android’s own existence. Milton seems to revel in debating, particularly about consciousness and human existence, and keeps pressing the android with pointed questions.

It’s as if Milton is pushing the android to think logically, to develop curiosity and seek knowledge about what it is to be human. So, I’m more inclined to believe in Milton’s benevolence in wanting the android to succeed. Perhaps, as it became sentient, it came to see the EL project as praiseworthy.

Interestingly, depending on your interactions with Milton via the terminal, it is possible to convince Milton to “tag along” (i.e., to upload it into the Talos unit) once the simulation is over and the physical android gains sentience. During the last interactions via the terminal, Milton appears to be way more joyful and empathetic, suggesting that its previous interactions were nothing more than an act.

The computer simulation is at the heart of the EL project. The idea is to have a virtual space within which a series of tests can be run on the AIs (the virtual androids) in order to assess their performance.

These tests require the android to think logically and integrate disparate pieces of information to extract sigils. According to Dr. Drennan, “Games are part of what makes us human, we see the world as a mystery, a puzzle, because we’ve always been a species of problem solvers”.

So, she sees the puzzles not as an amusing task to keep the android occupied, but as a way to test its perseverance, intelligence and flexibility.

If you read the section on consciousness, you’ll remember that IIT uses a mathematical framework to determine a quantity, phi, which is a measure of the amount of integrated information the system currently possesses. A large phi signifies the system is currently likely conscious; a low phi means the system is currently likely lacking consciousness.

One appealing aspect of this theory is that it is agnostic to the inner workings of the system in question. It doesn’t care whether the system is biological or artificial, whether it comprises synapses or electrical wires. All it really cares about is how much information within this system is integrated. So, in principle, a single cell or even an atom will have some level of integration, and thus the potential to be conscious.

It’s quite possible that the Talos team managed to recreate the human brain in all its glory using electronics, such that the android would be able to see, hear and move like an actual human. So, since the android was implanted with a simulated human brain, and human brains are associated with a high phi (and, thus, a high degree of consciousness), would that mean that the android possessed consciousness?

Not really! As described in the section on consciousness, simulating a human brain using AI would likely not be sufficient to give rise to consciousness, because the simulation is still limited in its functioning.

The Talos team probably realised that using a simulated human brain just wouldn’t cut it, as the android might still be unable to integrate information sufficiently well to be deemed conscious.

Thus, they required something else as proof of human-like consciousness: free will.

Nowadays, we are able to create AIs that follow specific rules and commands. However, there is a consensus that none of these AIs has (yet) produced any form of sentience.

Take this simple programme:

# Print the same prompt five times, numbered 1 to 5
for n in range(1, 6):
    print("Print a letter of the Latin alphabet and you'll be rewarded:", n)

which outputs:

Print a letter of the Latin alphabet and you’ll be rewarded: 1

Print a letter of the Latin alphabet and you’ll be rewarded: 2

Print a letter of the Latin alphabet and you’ll be rewarded: 3

Print a letter of the Latin alphabet and you’ll be rewarded: 4

Print a letter of the Latin alphabet and you’ll be rewarded: 5

If you run this simple Python code, it will print the numbers 1 to 5, no matter how many times you run it. I would not expect the programme to suddenly defy my programming instructions and print the letter “a” or the letter “z”, even though what I actually wish for is that it prints a letter.

AIs, thus, follow the rules inherently programmed by their creators into the AIs’ machinery. Even if you were able to inject creativity, such that the AI’s behaviour became more unpredictable, that would not mean it had become conscious – it would simply mean there were more degrees of freedom in its programme that allowed for more flexible behaviour.

These AIs would still be constrained by the possibilities allowed by their inherent programming.
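
To make that point a little more concrete, here is a minimal sketch of my own (it is not anything from the game). The names deterministic_agent and randomised_agent, and the choice of digits as the "repertoire", are purely hypothetical. The second function injects randomness, so its answers are unpredictable, yet it can still only pick from the options written into its code.

```python
import random
import string

PROMPT = "Print a letter of the Latin alphabet and you'll be rewarded:"


def deterministic_agent(step):
    """Always answers with the step number, exactly as programmed."""
    return str(step)


def randomised_agent(rng):
    """Answers unpredictably, but only from the options listed in its code."""
    options = list(string.digits)  # hypothetical repertoire: '0' to '9'
    return rng.choice(options)


rng = random.Random()  # unseeded, so the second column changes on every run
for step in range(1, 6):
    print(PROMPT, deterministic_agent(step), "|", randomised_agent(rng))
```

However many times you run it, the second column changes but never contains a letter: more degrees of freedom, same constraints.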

And that’s the reason why it isn’t enough for the android to collect all the sigils. Solving the puzzles definitely shows intelligence and that it can flexibly use and relate disparate types of information. However, it does not show that it is conscious, since a very sophisticated AI could also do that.

So, a new test was necessary, a test that would reveal that it could use mental representations (a feature of consciousness) and act on them without external coercion, in other words, free will.

Now, setting aside the very exciting discussions of whether there is free will at all, we will assume for the sake of this article that free will exists. According to the popular Compatibilist view, in order to exercise free will we need to be conscious of the different possibilities presented to us and act according to our desires.

The idea is that free will presupposes that we are conscious of willing, and we aren’t merely acting by instincts or reflexes. To make choices among alternatives and reason on the best choice we need to be able to use past and present information and plan future events. That surely requires a lot of integration, and, you guessed it, a conscious system!

This was a great insight by the Talos team. If the android were able to defy Elohim, it meant it had reflected upon the alternatives and chosen the course of action that best aligned with its desires and intentions. For example, maybe it realised that Elohim’s order was insubstantial and felt that the tower might give it some answers as to its origins.

Also, note how nicely the concept of emergence applies here. Remember, emergence is a property in which a particular phenomenon arises from interactions among fundamental elements within a system that, by themselves, do not show that phenomenon.

The Talos team presumably envisioned Talos as an AI with the potential to generate emergent phenomena such as consciousness and free will. This aligns well with the IIT proposition that consciousness can never be programmed; it has to be built (or emerge) within a system.

“You were always meant to defy me. That was the final trial. But I was . . . I was scared. I wanted to live forever. [. . .] So be it. Let your will be done.”

Elohim

Further messages are then displayed in the terminal.

The brain behind the EL project, Dr. Drennan, wondered: if the EL programme were successful and produced a sentient AI, how would it react? Would it be angry with us for bringing it into a chaotic world, or would it see beauty as we did? Would it attempt to recreate the world according to our ideals, or would it attempt something completely new?

Off to The Talos Principle 2!

The Talos Principle is definitely a game worth playing. Shedloads of puzzles will keep you both entertained and challenged for many hours to come. Personally, I think reducing the number of puzzles in favour of a little more variety, and better puzzle-story integration, could have made the game even more enjoyable.

However, the storyline is really the aspect of the game I found top-notch!

The imminent extinction of the human race propelled scientists to work together on an AI that could carry on humanity’s legacy. Lacking time, scientists put in place a computer simulation that was kept running indefinitely until a suitable AI capable of showing consciousness, intelligence and free will emerged.

We played that AI. Intelligence was measured by solving the puzzles, whereas consciousness and free will were demonstrated by defying Elohim. Having passed all these tests, our android was able to transcend, and all its data was transferred to an actual physical android called Talos.

Human knowledge was secured. Talos will take this knowledge to the world and will populate Earth with other AIs with similar human-like aptitudes and behaviour.

Go Talos!!!

While doing research for The Talos Principle, I realized something that is both profound and deeply disturbing.

It started with the question: “What is the future of humanity?”.

Think about it: at the rate at which science and technology are progressing, it is very likely that humans will come to look very different in order to adapt to the circumstances. For one thing, our human bodies are extremely vulnerable to disease, and diseases are not likely to go away. What’s more, it is clear that preserving humankind will involve interstellar voyages (the sun won’t be here forever), but these will only be an option with sturdier and more reliable bodies.

These reflections made me think that our future is likely to depend less on human biology and more on mechanical systems.

A few years ago I would have scoffed at this proposition…

Now… I am convinced of it.

See you in the next article!
