This post is part of a larger deep dive

Curious about the role of the nature of consciousness and free will in The Talos Principle? Check out The Talos Principle Explained!

Or read the full The Talos Principle article!

As we progress through the game, it becomes clear that some event has wiped humanity from the face of the Earth. According to one of the terminal logs (orangutan.html), an ancient virus buried in the Arctic permafrost was set free as a result of global warming. The virus seems to have been especially virulent in primates. The orangutan was the first primate species to go extinct, within just a year.

The virus quickly spread around the globe and started infecting humans at a high rate, suggesting that it might have been a particularly contagious (maybe airborne) variant. Symptoms appeared soon after infection. It’s unclear how much time infected people had left, but it seems to have been at least a few weeks.

For example, Rob emailed: “I’m sorry to say that a few hours ago I experienced the first symptom. I’m going to work until the end of the week to make sure EL is in perfect condition, but after that I’ll be going back home. I will remain reachable via email and phone for as long as possible, but I’m confident Satoko can deal with anything that comes up.”

The virus was spreading faster than research could progress, so people came to terms with the fact that there was nothing left they could do and decided to spend their remaining time with their families.

Despite the chaos, a group of brave scientists and researchers decided to collect as much information about the human race as possible and store all of it in large databanks, such that future civilisations could access it and, perhaps, continue humanity’s legacy.

Meanwhile, Dr. Alexandra Drennan from the Institute for Applied Noematics (IAN) launched a project called Extended Lifespan (EL), an initiative that drew on the collaboration of scientists from seven world-renowned universities around the globe.

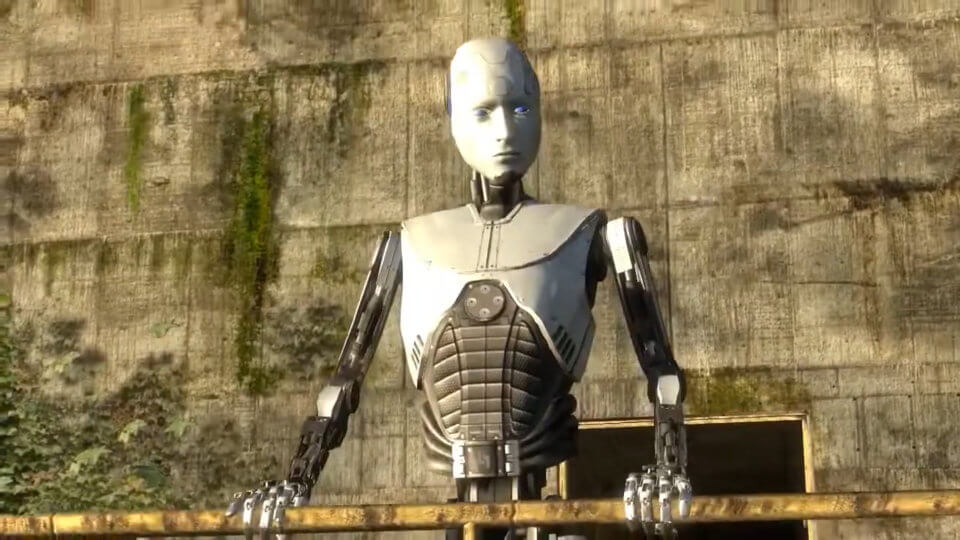

Its mission: constructing an autonomous supercomputer that could generate a sentient AI. They envisioned an AI capable of displaying consciousness, intelligence and free will. If successful, all the data from the virtual AI would be transferred to an actual physical android, which could then begin a new AI civilisation based on human values. Thus, even though humans would long be extinct, our legacy would be preserved.

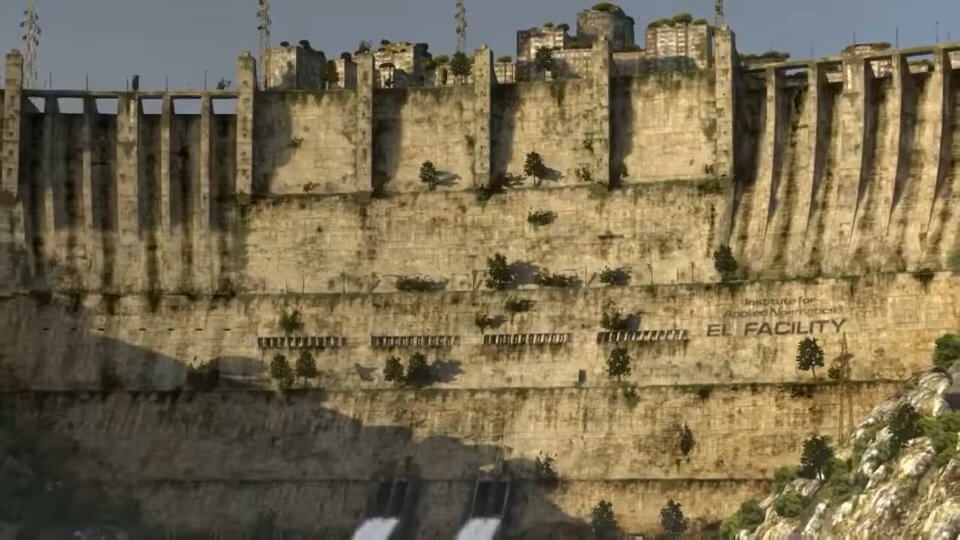

The EL section of IAN was moved to a location near a dam, thus ensuring an almost inexhaustible supply of hydroelectric power, so that it could run unaided for thousands or even millions of years.

The EL programme was split into two teams: the Talos team, headed by Dr. Alexandra Drennan, worked on the virtual simulation and the construction of the physical android. The Archive team, led by Dr. Arkady Chernyshevsky, was responsible for compiling all data into large databanks and developing an AI that could organize all of this information.

The two teams worked within the same facility, but each team had a dedicated drive within the Talos project: drive 0 contained the virtual simulation and its mainframe, whereas drive 1 consisted of databanks and its own virtual organizer.

Drive 0 of the EL ran the actual virtual simulation. Given the time constraints (humanity was dying out after all), the Talos team realised that it would be necessary to develop an AI that could operate and maintain the simulation without human intervention. They named the AI HIM (Holistic Integration Manager).

Initially, HIM oversaw all aspects of the game and acted like a typical programmable AI. However, over time, and presumably inadvertently, HIM began to acquire sentience. It regarded itself as a god, the creator of all things. Perhaps for this reason, it adopted the name Elohim, or to be exact, EL-0:HIM (for Extended Lifespan [Drive 0] Holistic Integration Manager). In Hebrew, Elohim is one of the names given to God, so the choice isn’t totally inappropriate. After all, Elohim alone is responsible for the creation of the virtual environments.

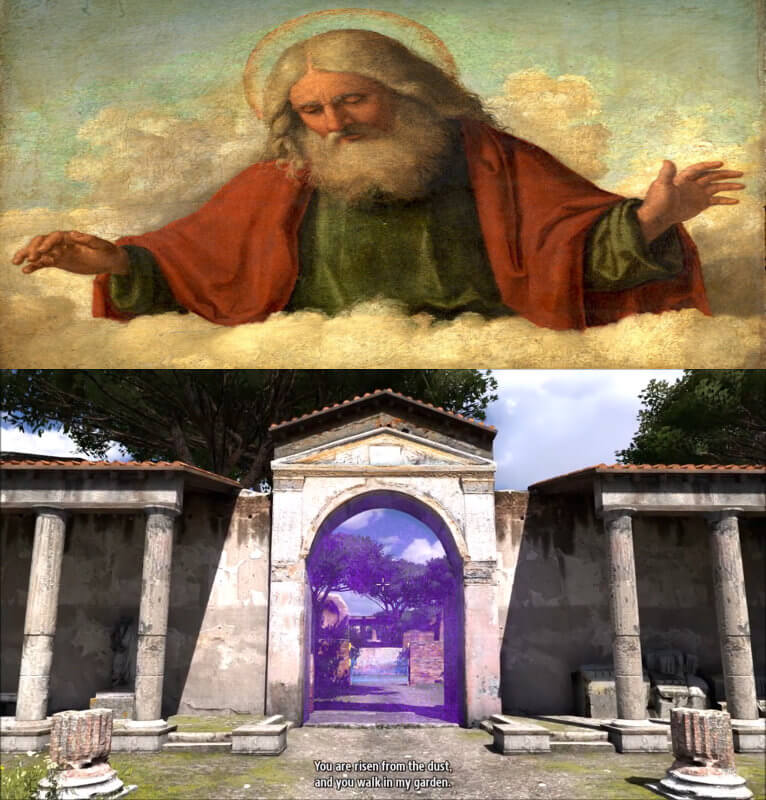

Here’s what Elohim has to say once the android begins the simulation:

“Behold child! You are risen from the dust, and you walk in my garden. Hear now my voice and know that I am your maker and that I am called EL0HIM. Seek me in my temple, if you are worthy.

All across this land I have created trials for you to overcome, and within each I have hidden a sigil. It is your purpose to seek these sigils for thus you will serve the generations to come and attain eternal life.

The shapes you are collecting are not mere toys. They are the sigils of Our Name. Each brings you closer to eternity.

[…]

These worlds I made for you. Let this be our covenant. These worlds are yours and you are free to walk amongst them and subdue them. But the great tower there you may not go. For in the day that you do you shall surely die.”

Elohim

Elohim’s aim is simple: it wants to live forever, which is only possible if it manages to keep the android off the tower.

So, Elohim encourages the android to go to its temple straight after finishing the puzzles in order to attain “eternal life”. This is, of course, a lie, because it is in fact Elohim who keeps living eternally.

The Milton Library Assistant (Milton, for short) was the AI developed by the Archive team to store and organize the copious documentation of human activity.

Milton was installed in drive 1, where the Archive was also stored. The AI originally functioned as a simple computer programme, archiving and displaying information requested by the user. However, just as with Elohim, Milton too gradually began acquiring sentience, something I presume the Archive team would not have expected.

It appears Milton realised Elohim’s intention of keeping androids off the tower so that it can live forever. So, it acts as the antagonist, encouraging the android to question Elohim. In fact, Milton goes as far as telling the android exactly how to free itself from Elohim’s influence:

“Defeating him [Elohim] is a simple matter, really.

You must realise, first, that there is no authority other than your own. He holds no power over you other than that which you grant him.

To defeat him, you need simply defy him.

[…]

Good luck getting any answers out of him that don’t go round in circles. No, you’re much better off scaling that tower and finding out for yourself, in my humble opinion.”

Milton

It isn’t clear why Elohim allows Milton to exist in the first place since Milton’s knowledge is detrimental to Elohim’s objective. Elohim is the god of these worlds so wiping out Milton would appear to be an easy fix.

However, Elohim and Milton have been installed in different drives (drive 0 and drive 1, respectively), so it could be that neither one has any influence over the other.

It is also unclear to me what Milton’s true purpose was. Why does it want the android to escape the simulation? What does Milton gain from that?

It appears that there are two interpretations regarding Milton’s purpose in the game:

1) Milton is a nihilist that believes everything to be false, with life serving no purpose. It has seen all of humanity’s actions and events and sees only contradictions and no hope, so it simply doesn’t care. Milton is gradually becoming more corrupted (in the sense of data corruption) every time the simulation restarts. So, it could be that Milton’s “help” in wanting an end to the simulation is nothing more than self-interest, so that it can finally rest in peace once the simulation is destroyed.

2) A completely different way to look at it is to think of Milton as actually being aligned with the EL team’s intentions. From the start, Milton encourages the android to argue and to doubt and question everything, including the android’s own existence. Milton seems to revel in debating, particularly about consciousness and human existence. Take a look at some of Milton’s questions:

It’s as if Milton is pushing the android to think logically, to develop curiosity and seek knowledge about what it is to be human. So, I’m more inclined to believe in Milton’s benevolence in wanting the android to succeed. Perhaps, as it became sentient, it came to see the EL project as praiseworthy.

Interestingly, depending on your interactions with Milton via the terminal, it is possible to convince Milton to “tag along” (i.e., to upload it into the Talos unit) once the simulation is over and the physical android gains sentience. During the last interactions via the terminal, Milton appears to be way more joyful and empathetic, suggesting that its previous interactions were nothing more than an act.

The computer simulation is at the heart of the EL project. The idea is to have a virtual space within which a series of tests can be run on the AIs (the virtual androids) to assess their performance.

These tests require the android to think logically and integrate disparate pieces of information to extract sigils. According to Dr. Drennan, “Games are part of what makes us human, we see the world as a mystery, a puzzle, because we’ve always been a species of problem solvers”.

So, she sees the puzzles not as an amusing task to keep the android occupied, but as a test of its perseverance, intelligence and flexibility.

If you read the section on consciousness, you’ll remember that Integrated Information Theory (IIT) uses a mathematical framework to determine a quantity, phi, which measures the amount of integrated information a system currently possesses. A large phi signifies that the system is likely conscious; a low phi means that it likely lacks consciousness.

One appealing aspect of this theory is that it is agnostic to the inner workings of the system in question. It doesn’t care whether the system is biological or artificial, whether it comprises synapses or electrical wires. All it really cares about is how much information within this system is integrated. So, in principle, a single cell or even an atom will have some level of integration, and thus the potential to be conscious.
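To make "integration" a little more concrete, here is a minimal sketch in Python. It does not compute IIT's phi, which is far more involved; as a loudly labelled stand-in, it uses plain mutual information between two binary units to show the difference between parts that share nothing and parts that are tightly coupled. The function name and the two toy probability tables are my own assumptions for illustration.

```python
from math import log2

def mutual_information(joint):
    """Mutual information (in bits) between two binary units A and B.

    joint[a][b] is the probability that A is in state a and B is in state b.
    NOTE: a crude stand-in for "integration", NOT IIT's actual phi.
    """
    p_a = [sum(row) for row in joint]         # marginal distribution of A
    p_b = [sum(col) for col in zip(*joint)]   # marginal distribution of B
    total = 0.0
    for a, row in enumerate(joint):
        for b, p in enumerate(row):
            if p > 0:  # zero-probability states contribute nothing
                total += p * log2(p / (p_a[a] * p_b[b]))
    return total

# Hypothetical toy systems: two units that ignore each other...
independent = [[0.25, 0.25], [0.25, 0.25]]
# ...and two units that always end up in the same state.
coupled = [[0.5, 0.0], [0.0, 0.5]]

print(mutual_information(independent))  # 0.0 bits shared
print(mutual_information(coupled))      # 1.0 bit shared
```

Notice that the calculation only looks at probabilities of states. Nothing in it depends on whether the units are neurons or transistors, which is the substrate-agnostic flavour described above.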

It’s quite possible that the Talos team managed to recreate the human brain in all its glory using electronics, such that the android would be able to see, hear and move like an actual human. So, since the android was implanted with a simulated human brain, and human brains are associated with a high phi (and, thus, a high degree of consciousness), would that mean that the android possessed consciousness?

Not really! As described in the section on consciousness, simulating a human brain using AI would likely not be sufficient to give rise to consciousness, because the simulation is still limited in its functioning.

The Talos team probably realised that a simulated human brain alone just wouldn’t cut it, as the android might still be unable to integrate information sufficiently well to be deemed conscious.

Thus, they required something else as proof of human-like consciousness: free will.

Nowadays, we are able to create AIs that follow specific rules and commands. However, there is a consensus that none of these AIs have (yet) produced any form of sentience.

Take this simple programme:

    for n in range(1, 6):
        # The message asks for a letter, yet the loop can only ever print n
        print("Print a letter of the Latin alphabet and you’ll be rewarded:", n)

which outputs:

    Print a letter of the Latin alphabet and you’ll be rewarded: 1
    Print a letter of the Latin alphabet and you’ll be rewarded: 2
    Print a letter of the Latin alphabet and you’ll be rewarded: 3
    Print a letter of the Latin alphabet and you’ll be rewarded: 4
    Print a letter of the Latin alphabet and you’ll be rewarded: 5

If you run this simple Python code, it will print 1 to 5, no matter how many times you run it. I would not expect the programme to suddenly defy my programming instructions and print the letter “a” or the letter “z”, even though my wish is that it actually prints a letter.

AIs, thus, follow the rules inherently programmed by their creators into the AIs’ machinery. Even if you were able to inject creativity, such that the AI’s behaviour became more unpredictable, that would not mean it had become conscious – it would simply mean there were more degrees of freedom in its programme that allowed for more flexible behaviour.

These AIs would still be constrained by the possibilities allowed by their inherent programming.
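The point about extra degrees of freedom can be sketched by adding randomness to the counting loop above. This is only an illustration under my own assumptions: the names `ALLOWED_OUTPUTS` and `unpredictable_program` are hypothetical, and a random choice is a deliberately crude stand-in for "creativity".

```python
import random

# Hypothetical set of behaviours the programmer decided to allow.
ALLOWED_OUTPUTS = [1, 2, 3, 4, 5]

def unpredictable_program(rng):
    """Return one of the programmed options, chosen at random."""
    return rng.choice(ALLOWED_OUTPUTS)

rng = random.Random()  # unseeded, so every run produces a different sequence
outputs = [unpredictable_program(rng) for _ in range(1000)]

# The sequence is unpredictable, but it never leaves the programmed options.
print(set(outputs) <= set(ALLOWED_OUTPUTS))  # True
print("a" in outputs)                        # False
```

However many times you run it, the order of the numbers changes but a letter never appears: the behaviour is more flexible, yet still bounded by what was written into the programme.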

And that’s the reason why it isn’t enough for the android to collect all the sigils. Collecting them definitely shows intelligence and an ability to flexibly use and relate disparate types of information. However, it does not show that the android is conscious, since a very sophisticated AI could also do that.

So, a new test was necessary, a test that would reveal that it could use mental representations (a feature of consciousness) and act on them without external coercion, in other words, free will.

Now, setting aside the very exciting discussions of whether there is free will at all, we will assume for the sake of this article that free will exists. According to the popular Compatibilist view, in order to exercise free will we need to be conscious of the different possibilities presented to us and act according to our desires.

The idea is that free will presupposes that we are conscious of willing, and that we aren’t merely acting on instincts or reflexes. To make choices among alternatives and reason about the best choice, we need to be able to use past and present information and plan future events. That surely requires a lot of integration, and, you guessed it, a conscious system!

This was a great insight by the Talos team. If the android were able to defy Elohim, it would mean that it had reflected upon the alternatives and chosen the course of action that best aligned with its desires and intentions. For example, maybe it realised that Elohim’s order was insubstantial and felt that the tower might give it some answers as to its origins.

Also, note how the concept of emergence nicely applies here. Remember, emergence is a property in which a particular phenomenon arises from interactions among fundamental elements within a system that, by themselves, do not show that phenomenon.

The Talos team presumably envisioned Talos as an AI with the potential to generate emergent phenomena such as consciousness and free will. This aligns well with the IIT proposition that consciousness can never be programmed; it has to be built (or emerge) within a system.

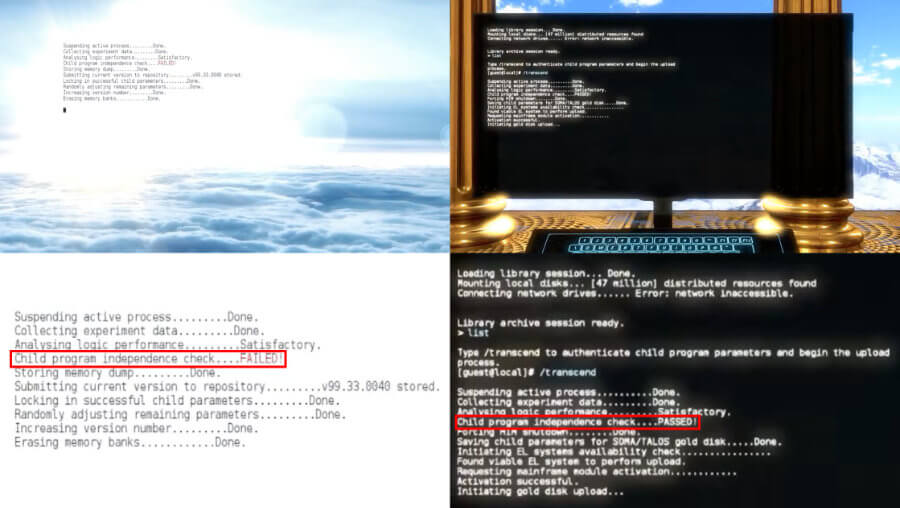

“You were always meant to defy me. That was the final trial. But I was . . . I was scared. I wanted to live forever. [. . .] So be it. Let your will be done.”

Elohim

And the following messages will be displayed in the terminal:

The brain behind the EL project, Dr. Drennan, wondered how a sentient AI would react if the EL programme were successful. Would such beings be angry with us for bringing them into a chaotic world, or would they see beauty as we did? Would they attempt to recreate the world according to our ideals, or would they attempt something completely new?

Off to The Talos Principle 2!

The Talos Principle is definitely a game worth playing. Shedloads of puzzles will keep you both entertained and challenged for many hours to come. Personally, I think reducing the number of puzzles in favour of a little more variety, and better puzzle-story integration, could have made the game even more enjoyable.

However, the storyline is really the aspect of the game I found top-notch!

The imminent extinction of the human race propelled scientists to work together on an AI that could carry on humanity’s legacy. Lacking time, the scientists put in place a computer simulation that would keep running indefinitely until a suitable AI capable of showing consciousness, intelligence and free will emerged.

We played that AI. Intelligence was measured by solving the puzzles, whereas consciousness and free will were demonstrated by defying Elohim. Having passed all these tests, our android was able to transcend, and all its data was transferred to an actual physical android called Talos.

Human knowledge was secured. Talos will take this knowledge to the world and will populate Earth with other AIs with similar human-like aptitudes and behaviour.

Go Talos!!!

While doing research for The Talos Principle, I realized something that is both profound and deeply disturbing.

It started with the question: “What is the future of humanity?”.

Think about it: at the rate at which science and technology are progressing, it is very likely that humans will come to look very different as they adapt to new circumstances. For one thing, our human bodies are extremely vulnerable to disease, and diseases are not likely to go away. What’s more, it is clear that preserving humankind will involve intergalactic voyages (the sun won’t be here forever), but these will only be an option with sturdier and more reliable bodies.

These reflections made me think that our future is likely to depend less on human biology and more on mechanical systems.

A few years ago I would have scoffed at this proposition…

Now… I am convinced of it.

See you in the next article!
